Hai Zhang | 张 海

Hi there!

I'm a first-year PhD student at the Multimedia Laboratory (MMLab) of The University of Hong Kong, jointly supervised by Prof. Hongyang Li and Prof. Fu Zhang.

I also serve as the head TA for the newly launched undergraduate course CCAI9025.

Prior to this, I earned both my Master's and Bachelor's degrees in Computer Science and Technology from Tongji University, where I had the privilege of being supervised by Prof. Junqiao Zhao.

My research interests lie in general reinforcement learning and its applications to embodied intelligence, e.g., robotic manipulation and vision-language navigation 🤖.

I firmly believe that reinforcement learning holds significant promise as a pivotal pathway toward achieving Artificial General Intelligence.

Previously, I was fortunate to collaborate with Prof. Lanqing Li and Prof. Pheng Ann Heng at The Chinese University of Hong Kong.

Currently, I'm working closely with Dr. Chuan Wen and Prof. Yang Gao at Tsinghua University to study generalization for general-purpose robotic manipulation.

I'm always open to collaborations on reinforcement learning and robotics projects! Feel free to reach out; I'd love to connect! 👋

GitHub • Zhihu • Google Scholar • hai.zhang@connect.hku.hk


- Time: 2026.01~current
  Research Topic: Large-Scale VLN Model

- Time: 2024.06~current
  Research Topic: VLA-Based General Manipulation
  Supervisor: Prof. Yang Gao

- Time: 2023.07~2024.05
  Research Topic: Offline Meta Reinforcement Learning
  Supervisor: Prof. Lanqing Li and Prof. Pheng Ann Heng

ASTRO: Adaptive Stitching via Dynamics-Guided Trajectory Rollouts

Hang Yu, Di Zhang, Qiwei Du, Yanping Zhao, Hai Zhang, Guang Chen, Junqiao Zhao†, Eduardo E. Veas†

Under Review

TL;DR:

We propose ASTRO, a data augmentation framework that generates distributionally novel and dynamics-consistent trajectories for offline RL.

ASTRO first learns a temporal-distance representation to identify distinct and dynamically plausible stitch targets.

We then employ a dynamics-guided diffusion stitch planner, which uses extrapolation discrepancies (the gap between predicted and actual observations) to adaptively stitch trajectories through model-based rollouts.

Paper • Code

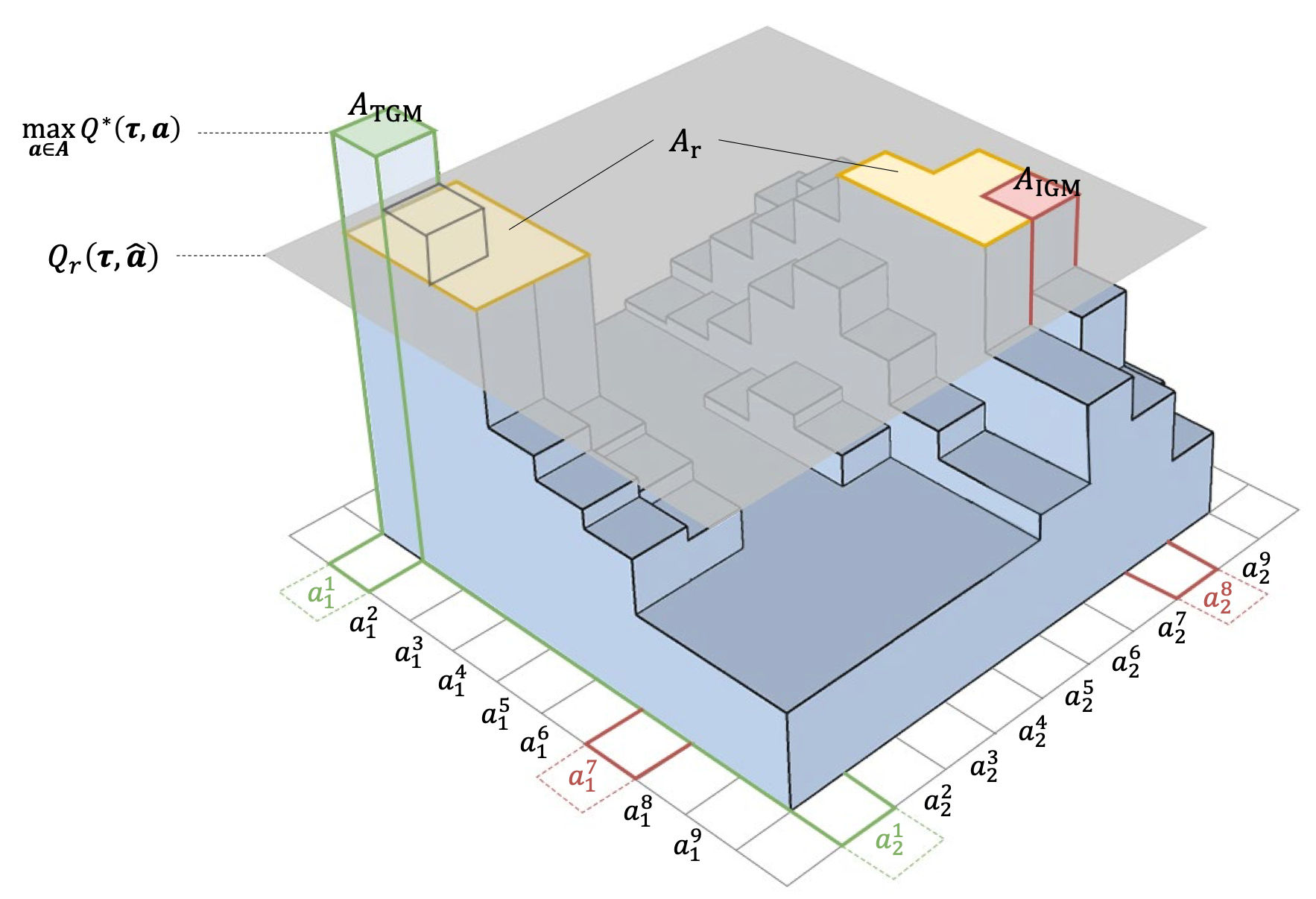

Potentially Optimal Joint Actions Recognition for Cooperative Multi-Agent Reinforcement Learning

Chang Huang*, Shatong Zhu*, Junqiao Zhao†, Hongtu Zhou, Hai Zhang, Di Zhang, Chen Ye, Ziqiao Wang, Guang Chen

International Conference on Learning Representations (ICLR) 2026

TL;DR:

We propose Potentially Optimal Joint Actions Weighting (POW), a method that ensures optimal policy recovery where existing approximate weighting strategies may fail.

POW iteratively identifies potentially optimal joint actions and assigns them higher training weights within a theoretically grounded weighted training process.

We prove that this mechanism guarantees recovery of the true optimal policy, overcoming the limitations of prior heuristic weighting strategies.

Paper • Code

KineDex: Learning Tactile-Informed Visuomotor Policies via Kinesthetic Teaching for Dexterous Manipulation

Di Zhang, Chengbo Yuan, Chuan Wen, Hai Zhang, Junqiao Zhao, Yang Gao†

Conference on Robot Learning (CoRL) 2025

★ Spotlight Presentation ★

TL;DR:

We propose KineDex, a hand-over-hand kinesthetic teaching paradigm in which the operator's motion is directly transferred to the dexterous hand, enabling the collection of physically grounded demonstrations enriched with accurate tactile feedback.

KineDex can complete highly challenging tasks, such as squeezing toothpaste onto a toothbrush, that require precise multi-finger coordination and stable force regulation.

KineDex collects data over twice as fast as teleoperation across two tasks of varying difficulty, while maintaining a near-100% success rate, compared to under 50% for teleoperation.

Paper • Code • Website • Overview

CERTAIN: Context Uncertainty-aware One-Shot Adaptation for Context-based Offline Meta Reinforcement Learning

Hongtu Zhou*, Ruiling Yang*, Yakun Zhu, Haoqi Zhao, Hai Zhang, Di Zhang, Junqiao Zhao†, Chen Ye, Changjun Jiang

International Conference on Machine Learning (ICML) 2025

TL;DR:

We propose CERTAIN to tackle context ambiguity and out-of-distribution (OOD) issues in one-shot adaptation for COMRL by leveraging uncertainty-aware task representation learning and context collection.

Built upon heteroscedastic-like uncertainty estimation, our method identifies unreliable contexts, leading to more robust policies.

Paper • Code

Scrutinize What We Ignore: Reining In Task Representation Shift Of Context-Based Offline Meta Reinforcement Learning

Hai Zhang, Boyuan Zheng, Tianying Ji, Jinhang Liu, Anqi Guo, Junqiao Zhao†, Lanqing Li†

International Conference on Learning Representations (ICLR) 2025

★ (Score: 6 6 8 8; top 5%) ★

TL;DR:

We unify the training scheme commonly used in COMRL with the general RL objective, filling the gap between intuition and theoretical justification.

We identify a new issue, task representation shift, and theoretically show that reining in this previously ignored shift makes monotonic performance improvement achievable.

Paper • Code


Towards an Information Theoretic Framework of Context-Based Offline Meta-Reinforcement Learning

Lanqing Li*, Hai Zhang*, Xinyu Zhang, Shatong Zhu, Junqiao Zhao†, Pheng-Ann Heng

Annual Conference on Neural Information Processing Systems (NeurIPS) 2024

★ Spotlight Presentation (Score: 7 7 8; top 1%) ★

TL;DR: We propose a novel information-theoretic framework for the context-based offline meta-RL paradigm that unifies mainstream COMRL methods and leads to a general, state-of-the-art algorithm called UNICORN, which exhibits remarkable generalization across a broad spectrum of RL benchmarks, context shift scenarios, data qualities, and deep learning architectures.

Paper • Code • Overview

Focus On What Matters: Separated Models For Visual-Based RL Generalization

Di Zhang, Bowen Lv, Hai Zhang, Feifan Yang, Junqiao Zhao†, Hang Yu, Chang Huang, Hongtu Zhou, Chen Ye, Changjun Jiang

Annual Conference on Neural Information Processing Systems (NeurIPS) 2024

TL;DR: We propose SMG, which uses a reconstruction-based auxiliary task to extract task-relevant representations from visual observations and further strengthens the generalization ability of RL agents with two consistency losses.

Paper • Code


How to Fine-tune the Model: Unified Model Shift and Model Bias Policy Optimization

Hai Zhang, Hang Yu, Junqiao Zhao†, Di Zhang, Chang Huang, Hongtu Zhou, Xiao Zhang, Chen Ye

Annual Conference on Neural Information Processing Systems (NeurIPS) 2023

TL;DR:

We theoretically derive an optimization objective that unifies model shift and model bias, and then formulate a fine-tuning process that adaptively adjusts model updates to obtain a performance improvement guarantee while avoiding model overfitting.

Paper • Code


Safe Reinforcement Learning with Dead-Ends Avoidance and Recovery

Xiao Zhang, Hai Zhang, Hongtu Zhou, Chang Huang, Di Zhang, Chen Ye†, Junqiao Zhao†

IEEE Robotics and Automation Letters (RA-L)

★ Oral in IEEE International Conference on Robotics and Automation (ICRA) 2024 ★

TL;DR:

We propose a method to construct a boundary that discriminates between safe and unsafe states.

Constructing this boundary is equivalent to identifying dead-end states; the boundary marks the maximum extent to which safe exploration is guaranteed and therefore imposes minimal restriction on exploration.

Paper • Code


Selected competitions and projects.

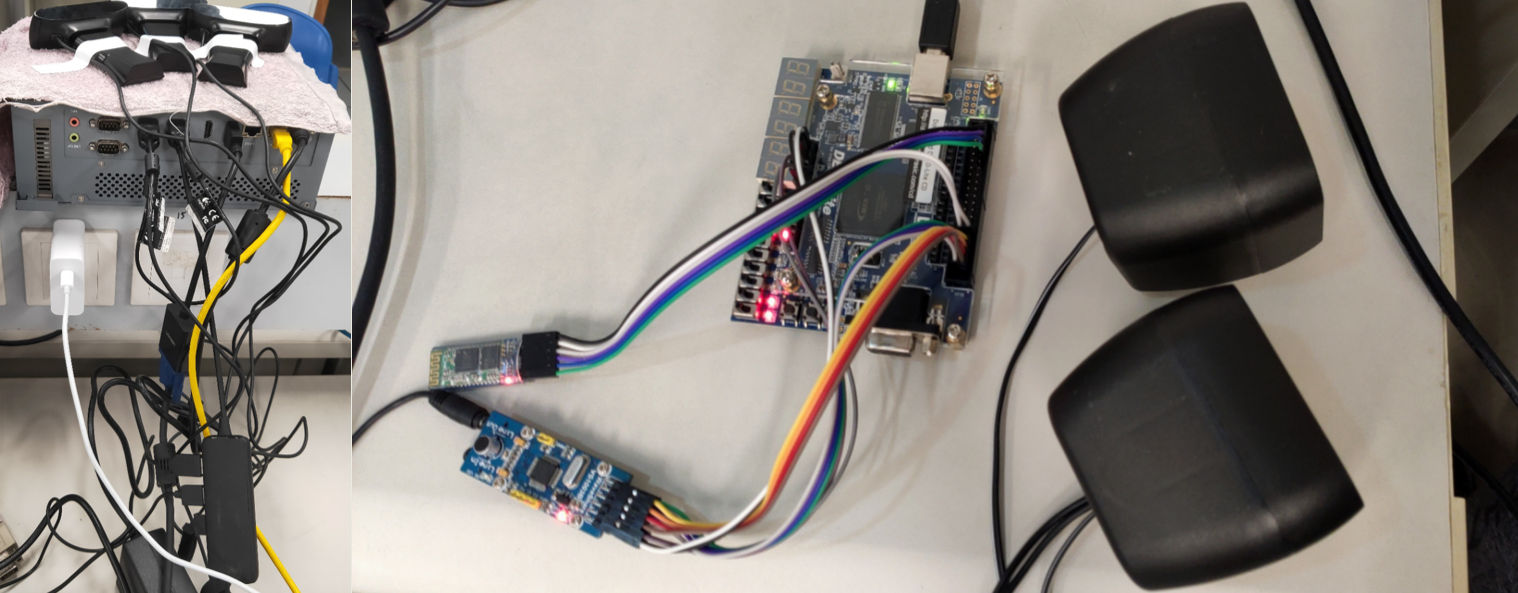

Unknown Environment Exploration and Application Device Based on DRL

National Second Prize in

Invention Patent (submitted): Distributed Vehicle Cloudization